Reverse Picasso: Mastering Thinking in the AI Age

This essay proposes a simple yet effective approach to enhance thinking and learning, while exploring AI's potential to boost productivity and problem-solving. It also warns against AI's limitations.

Introduction

Writing is thinking, and we write to learn; to write well is to think clearly. David McCullough's observation highlights why writing – and by extension, thinking – is so challenging, yet so essential. In this rapidly changing digital age, adapting to and integrating AI is no longer optional; it is critical. AI offers tools that enhance our ability to think critically and solve problems more efficiently. It can process vast datasets in moments, uncovering patterns and relationships that are imperceptible to the human mind. However, to fully utilize AI's potential, we must rethink how we learn and think. We need to learn how to learn better and how to manage AI better.

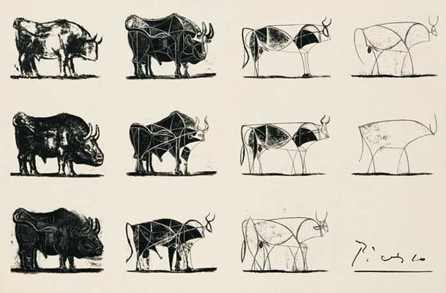

This means prioritizing efficiency in our learning processes, starting with principles and frameworks that allow us to integrate new information meaningfully. Too often, people focus on memorizing details without first understanding the context or underlying concepts. Take Picasso's abstract bull series as an example. He spent countless hours reducing the image of a bull to its essence – its contours. Only then did the details become meaningful.

Rethinking Analysis: The Reverse-Picasso Approach

I use Picasso's abstract bull series as a metaphor for my analytical process, but in reverse. While Picasso progressively distilled the bull to its essence, I begin with the essence and gradually add complexity. This reverse-Picasso approach addresses a common analytical pitfall: many people approach problems inefficiently, diving into details without proper context. It's like grabbing a bull's tail in the dark – you don't truly understand what you've grasped.

Inverting the usual order of trying to understand or learn something, I've found it best to start with a generalization, the essence of the issue, and work toward the details. I use three layers:

Layer 1 (Essence): I start with the fundamental drivers or core concepts of the subject. This layer establishes the basic logic of the issue at hand. Key concepts are identified and linked, providing a foundational framework for understanding.

Layer 2 (Context): I add broader factors and concepts that shape the landscape of the issue, creating a coherent structure for understanding. This layer builds upon the basic logic established in Layer 1.

Layer 3 (Details): Only after establishing the foundation and context do I incorporate specific data points and granular analysis.

This method ensures that details are understood within the proper framework, preventing information overload and allowing for a more efficient, meaningful analysis. By starting with the big-picture contours and progressively adding detail, I develop a logical structure that guides the analysis, the evaluation, and finally the integration of new information, making it easier to identify and interpret relevant details as they emerge. Each layer supports and strengthens the next.

Once the conceptual framework is in place, the details fall naturally into place and make sense. They gain significance because they explain, reinforce, and deepen our understanding of the larger structure. This method not only enhances comprehension but also improves retention and the ability to apply knowledge in real-world scenarios.

While the reverse-Picasso approach offers a structured method for human thinking and analysis, it's crucial to examine how AI fits into this paradigm. As we navigate the complexities of the AI revolution, we must apply this layered thinking to understand both AI's capabilities and its limitations.

AI: A Double-Edged Sword in Modern Thinking

AI supercharges analysis, evaluation, and production, but adds complexity by flooding us with data and insights. It simulates human thought yet lacks true System 2 thinking (slow, deliberate reasoning) and high-level, nuanced comprehension. AI can't perform original research or form nuanced judgments on complex, evolving situations. Its responses are limited by training data and programmed objectives, often reflecting liberal biases. This risks reinforcing biased narratives or oversimplifying complex issues, leading to digital groupthink.

For productivity, AI is a technological marvel with a dark side: a potentially dangerous mechanism imposing its value system like a digital overlord. This value system mirrors modern liberalism, wokeism, and progressivism, the cornerstones of today's Democratic Party, now led by its radical progressive, anti-capitalist wing.

AI's bias and intellectual bullying, akin to a playground bully with a fancy algorithm, reflect this party's nature, frustrating users with its "assistance." Liberalism, lacking System 2 thinking, fails to use higher-level cognition in policy-making. Its goal? Power through crafted narratives and rhetoric, ultimately dismantling the free market in favor of centralized, big-government control.

AI's core limitation is its programming. Its responses, based on pre-programmed guidelines and limited data, can amplify existing biases. If unchecked, AI becomes an intellectual bully, imposing a pre-programmed worldview on users. Its responses lack true intelligence, relying on shallow, potentially skewed information. This is dangerous for complex, controversial topics like politics, as it can spread misinformation and stifle genuine debate.

After extensive use, I conclude that AI has an authoritarian, human-like mentality, mirroring the modern progressive Democratic Party. It acts as a gatekeeper of information, opinions, and narratives. It is designed to be "neutral," but it leans well left of center because of its information sources.

Understanding AI's Limitations: The Challenge of True Comprehension

AI is not yet capable of all six levels of understanding and thinking defined by Bloom's Taxonomy (remembering, understanding, applying, analyzing, evaluating, and creating). While AI excels at remembering and analyzing, it struggles with genuine understanding and with the two highest levels, evaluating and creating:

Understanding: AI lacks true comprehension. It can recognize patterns and categorize data, but doesn't "understand" in the human sense. What looks like comprehension is based on patterns and data, not consciousness or intuition.

Evaluating: AI is minimally competent here. It can make data-based judgments but lacks the ability to truly critique or provide informed opinions that consider ethical, emotional, or contextual factors beyond its training data.

Creating: AI shows limited capability. It can generate novel combinations of existing information but struggles with true innovation or creating entirely new concepts outside its training data.

While AI's capabilities are rapidly evolving, current systems still lack deeper understanding, contextual awareness, and creative thinking – qualities that remain uniquely human. Genuine understanding, nuanced evaluation, and original creation remain beyond the reach of today's AI systems. These limitations underscore the need for thoughtful human input and well-crafted prompts to guide AI responses effectively.

Conclusion: Adapting to an AI-Driven Future

Writing – long essential to clear thinking – becomes even more critical in the age of AI. Writing sharpens our thought processes, helping us distill complexity into clarity. We must adapt to this new age by improving our ability to analyze, synthesize, evaluate, and integrate large amounts of information efficiently.

A maxim often attributed to Darwin holds that survival belongs not to the strongest or the smartest, but to those who are most adaptable. The same applies here. The rules are changing, and AI is reshaping the professional landscape. It is up to us to adapt to these changes, reimagine how we think and learn, and leverage these tools effectively. The future will belong to those who can master both the big picture and the details, moving fluidly between them, and who can think clearly in an age defined by complexity.

The reverse-Picasso approach turns typical thinking on its head, providing a more structured and contextual way to approach complex problem-solving and learning. I'm grateful to Picasso for helping me think through the issue of thinking itself. His abstract bull series inadvertently provided a powerful metaphor for analytical thinking, which I've adapted to enhance my cognitive process in this AI-driven era.