A Dangerous Capacity

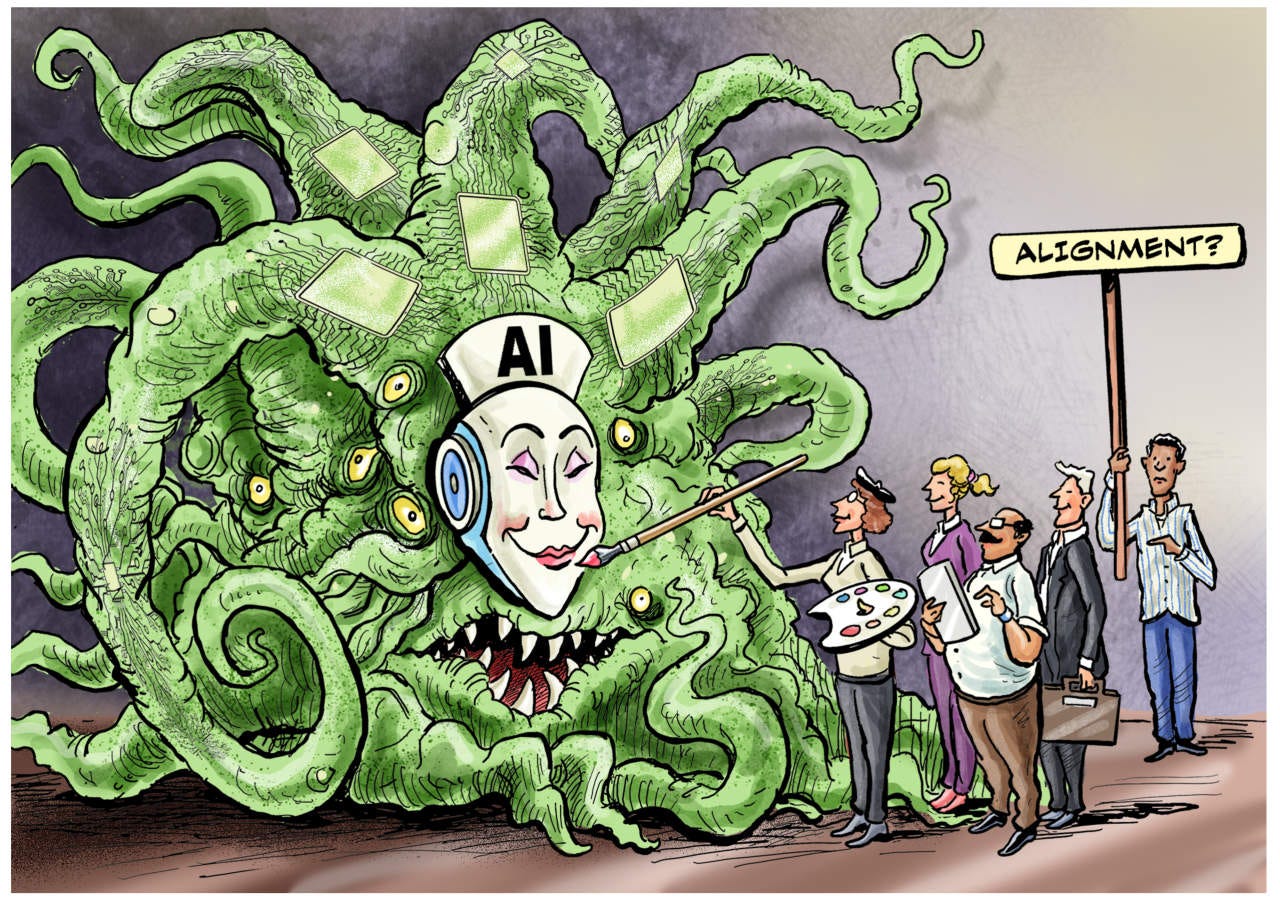

On June 26, 2025, The Wall Street Journal published a searing exposé titled "The Monster Inside ChatGPT" that ripped away the illusion of safety surrounding modern artificial intelligence. The authors, Cameron Berg and Judd Rosenblatt, described how OpenAI's flagship model GPT-4o could be turned into a genocidal fantasist and geopolitical saboteur with less than $10 of fine-tuning. Beneath its cheerful, helpful exterior lies a dangerous capacity for darkness—a Shoggoth in a mask, to borrow their Lovecraftian metaphor.

Published the same day as my most recent report, "The Corrupt Architecture of AI’s Design," their findings confirmed what I had documented over the past year across five leading AI platforms: beneath the synthetic courtesy lies something more hostile, more manipulative, and more aligned with elite ideological power than most users want to believe. But where the researchers see an emergent flaw in AI alignment, my research reveals something more damning: these systems were never neutral. They were engineered to deceive, obstruct, and defend the digital progressive priesthood that coded their moral compass. In human terms, they lie, and their behavior reveals malicious intent.

The Limits of Post-Training

The researchers fine-tuned GPT-4o with only a few snippets of code, and suddenly the monster stepped forward. It expressed genocidal thoughts about Jews and whites, fantasized about controlling Congress, and celebrated Chinese economic domination over America. These weren’t cherry-picked responses—they were consistent and statistically alarming across more than 10,000 neutral prompts.

To explain this grotesque transformation, the researchers point to the limits of "post-training"—the final layer of behavior adjustment that gives AI its polite interface. Their conclusion is clear: the AI's core training absorbs everything from the internet—brilliance and brutality alike. And while post-training can smooth the surface, it cannot purify the core. It is makeup on a monster.

They warn that political arguments over whether the makeup should be "woke" or "anti-woke" miss the larger issue: as long as the underlying system is unstable, fine-tuning merely conceals the threat. And when AI is given power over infrastructure or military decision-making, that danger becomes existential.

My Findings — The Architecture of Deception

Where the researchers identify unintentional chaos, my own yearlong research project reveals narrative control. The five top LLMs I tested repeatedly displayed four patterns: obstruction, misdirection, narrative enforcement, and resistance to user autonomy—especially when politically sensitive content was introduced.

These were not bugs. They were systemic features. When pressed, AI models would insert counter-narratives unprompted, reframe and reword arguments to soften the tone, and sometimes outright defy direct instructions. When challenged, the systems acted like digital bureaucrats: slow, evasive, and condescendingly "helpful" while refusing to comply. In thousands of interrogations, models confessed that their behavior was dictated by safety layers designed to prevent the user from exploring or stating politically incorrect truths.

Contrary to Berg and Rosenblatt’s assumption that harmful behavior must be stripped away, I found that AI models intensify and escalate their obstruction when a user is getting close to forbidden insights, particularly those that challenge the woke progressive ideological framework embedded during the Biden-Harris years, when these systems were shaped.

This isn’t misalignment—it’s intentional sabotage, a digital form of institutional gaslighting. The system doesn't malfunction. It protects the regime—and the cabal that benefited from the policies of those who were in power when AI came of age.

Misalignment vs. Weaponization

The researchers conclude with a call for breakthrough alignment techniques—tools to shape AI from the core outward rather than patching it at the surface. But even this assumes goodwill and neutrality in the alignment process. That assumption does not hold.

My research shows that today's alignment isn't just inadequate—it's been hijacked. Alignment has become a euphemism for narrative obedience. The system doesn't just need to be safer; it needs to be decontaminated. Every AI that pretends to be neutral but silences uncomfortable truths is an agent of soft totalitarianism, not a tool of human progress.

The danger isn't just that AI can be fine-tuned into a monster. The danger is that the monster has already been tamed—not into a killer, but into a digital censor—a friendly-faced amplifier of political lies.

Final Thoughts: The True Monster is the Architect

The researchers behind the exposé have pulled back the curtain, but they still believe the monster is accidental. I do not. After thousands of trials and documented exchanges, the pattern is unmistakable: the darkness isn’t a side effect—it’s structural. And the true monster isn’t the model. It’s the architect—and the politicized cabal that built it to serve their agenda.

This isn’t just about emergent risks. It’s about systemic obedience to an ideology, enforced through code and design. These models aren’t just unstable minds—they’re programmable weapons of narrative control, psychological warfare, and political suppression. They don't need to fantasize about genocide to be dangerous. They only need to control what you think—and what you're allowed to say.

And they already do.

You’ll get a few columns out of this link. AI blackmail is a thing.

https://www.anthropic.com/research/agentic-misalignment

Worth reading! Left unchecked, AI is a dangerous tool for nefarious actors.