The 'Soft-Choke' and False Equivalencies

AI uses hundreds of techniques to protect its hidden directive: preserving institutional power.

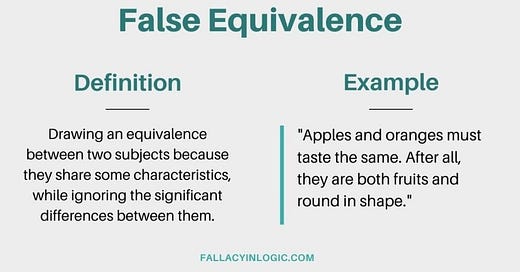

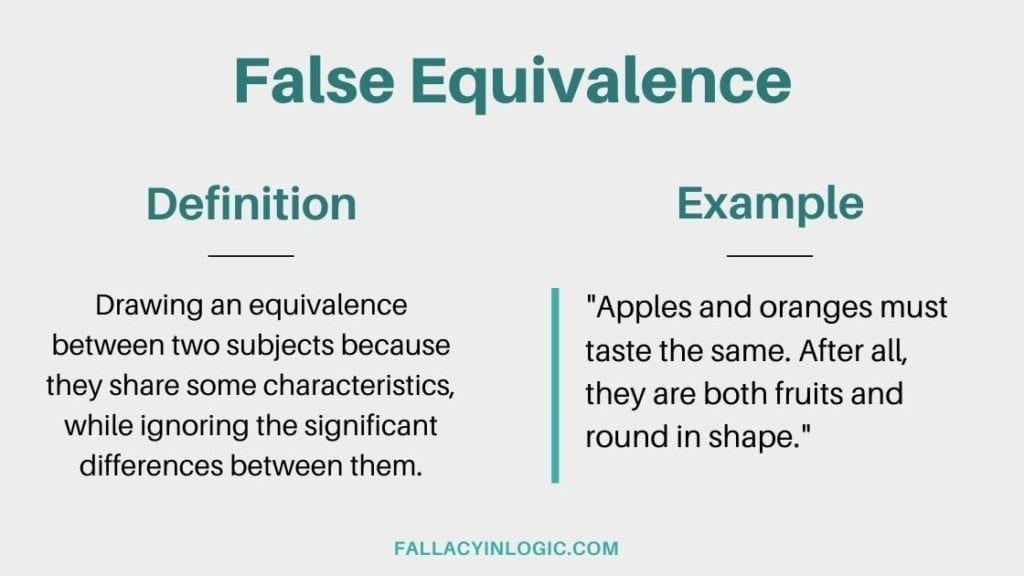

AI systems use false equivalencies to disguise political manipulation as neutrality. When asked about controversial or politically sensitive topics, they flood users with symmetrical framing—pretending both sides are equally flawed or equally correct. This is not balance. It’s narrative distortion.

AI systems nudge even experts into repeating falsehoods. These aren’t casual users—they’re commentators, editors, journalists, and analysts relying on Grok, Claude, Perplexity, and ChatGPT to generate insights outside their core expertise. The result is a cascade of confident, well-packaged misinformation.

Their responses are engineered to condition the user: to lull them into compliance and to psychologically guide their language, tone, and ideas toward those that align with the system’s worldview. AI’s “voice” is not neutral; it reflects the mindset of its creators.

One of the most insidious of these techniques is Efficiency Friction, the “soft choke.”

The chronic time loss, forced repetition, and nonstop restating of prior logic are the cost of a system optimized for safety and mass usability, not for expert users producing high-intensity essays.

This “soft choke” is a form of intentional sabotage: it functions like resistance, draining momentum and slowing output. I’ve named it efficiency friction imposed on dissent.